Mathematical Models of Information Operations

Abstract

The changes occurring in the contemporary world are related to the dynamics of the development of modern technologies and their impact on the life of almost every person. Currently, as never before in human history, “everything is interconnected” through ICT (Information and Communication Technologies) networks and systems, creating the so-called cyberspace. Due to wide access to the Internet, social media have started to play a more significant role in shaping public opinion on basically every subject and to broadly influence how individuals, social groups and whole societies perceive the world. The studies presented in this paper center on a consistent model of information operations that is based on the theory of reflexive control and the theory of disinformation and, at the same time, takes into account information diffusion models in network systems.

1 Introduction

1.1 Key terms

Currently, as never before in human history, “everything is interconnected” through ICT (Information and Communication Technologies) networks and systems, creating the so-called cyberspace. The term “cyberspace” was coined by the American science fiction writer William Gibson, who wrote about a world dominated by omnipresent and very cheap advanced technology. There is no universal definition of cyberspace. One of the most common is the definition from the publications of the US DoD (Department of Defense) [24], [25], e.g. “it is the space for creation, collection, processing and exchange of data, information and knowledge created by ICT systems and networks (including the Internet), together with external objects (e.g. users) interacting with such systems”. An important issue from the point of view of the topics discussed in this paper is that cyberspace constitutes a major part of the information environment of modern man and, thanks to the popularization of social media, is treated as a new social sphere where “people meet”. The concept of cyberspace was popularized with the development of the Internet, and therefore these terms are often used as synonyms.

Another important term is situation awareness [26], defined as the “cyclical perception of the status of the information environment, the comprehension of the meaning of particular components of the information environment and the projection (forecast) of its future status on the basis thereof to take appropriate decisions, and hence, actions that have an impact on the new status of the information environment”. The concept of situation awareness allows information operations to be defined as operations on the information environment carried out in order to:

- [offensive perspective] interfere with the opponent’s process of achieving situation awareness and hence influence the opponent’s decision-making process;

- [defensive perspective] prevent the opponent from interfering with the process of achieving one’s own situation awareness and hence make it impossible for the opponent to influence one’s own decision-making process.

1.2 The changing face of warfare

The “wars of the future” will be carried out mainly in cyberspace, which constitutes a key element of the modern information environment. Additionally, the “traditional” cybernetic operations (CyberOps) will be more and more often synchronized with information operations (InfoOps), creating de facto one collective operation in cyberspace. The battlefield will consist of information and data processing systems, that is, not only the hard ICT infrastructure (CyberOps) but everything that is “connected” to it, including people, i.e. “brains performing cognitive processes” (InfoOps). While awareness of technical threats is systematically raised and managed, awareness of the seriousness of threats at the information level is still insufficient.

1.3 Evolution of the Internet

The Internet, like many other breakthrough technologies, emerged in response to the needs of the US Armed Forces and was initially supposed to be a distributed command & control system able to withstand mass physical attacks. From the army, the Internet has come a long way to universities and companies, and finally became widely available to the general public. The history of the Internet’s development, in terms of both technology and utility, is tremendously interesting. From the perspective of the issues discussed in this paper, it is necessary to indicate the milestones known as Web1.0, Web2.0 and Web3.0, together with the hypothetical Web4.0, which allow us to understand the journey that the Internet has made in terms of technological development and its future destination. In a nutshell and with some simplification, the subsequent “versions” of the Internet may be characterized in the following manner:

- Web1.0 is the read-only web, where a relatively narrow group of creators publishes content on web portals; the rest of the network users are “consumers” who may basically only view content (sometimes with slight exceptions, e.g. comments added by portal visitors). From a historical point of view, it is the oldest “culture” of using the Internet, although it is still present, e.g. the majority of modern news portals have this character.

- Web2.0 is the read-write web, where the boundaries between the roles of “content creator” and “content consumer” are blurred. Web portals are replaced with web platforms, which create space for communication, understood in a broad sense, between users who are both “content creators” and “content consumers”. Such a “culture” of using the Internet is currently very common. Many online auction platforms (e.g. eBay), social networking services (e.g. YouTube, Facebook, Twitter), Wikipedia and other widely used systems were created on the basis of the Web2.0 concept.

- Web3.0 is also the read-write web, being the upgraded version of Web2.0 thanks to artificial intelligence, aimed at ensuring better understanding of the needs and interests of web users. Web platforms migrate towards the “culture” of intelligent web platforms, thus, it is possible to recommend users “customized” content, with little or no effort on the part of such users. The content is customized by profiling users, which – as it turns out – has far-reaching consequences and becomes a sensitive issue.

- Web4.0 is the hypothetical “culture” of the Internet in the future, when all devices are actually connected to the global network as a result of the dissemination of the concept known as the Internet of Things (IoT). The intelligent web platforms will be integrated into “one body”, allowing the exchange of data, information and knowledge without any of the present limitations (integration issues), and including a breakthrough in the possibilities provided by artificial intelligence, understood as the transition from the current narrow artificial intelligence to the visionary concept of general artificial intelligence. Finally, Web4.0, defined as a symbiotic network, is supposed to eliminate all present obstacles in man-machine communication that are related to unnatural interfaces, e.g. keyboard and mouse. Contemporary visionaries (i.a. Ray Kurzweil, presenting the concept of the Singularity) predict that in the middle of the 21st century people will be able, or will even have, to connect their brains to computers. Projects that aim to connect a human brain to a computer are not fantasy. Successful research has been carried out for many years now, using, among other things, EEG (Electroencephalography) to communicate with paralyzed persons or to control the movement of artificial limbs. Furthermore, a number of ambitious projects connecting brains with computers have been launched not only to control devices, but also to record “thoughts” on an external data carrier for various purposes. Such projects are developed by Elon Musk’s Neuralink and DARPA (Defense Advanced Research Projects Agency).

In summary, the immersion into cyberspace is increasing, hence, the impact of cyberspace on a human being in the physical world is also growing. Therefore, we are moving towards the blurring of the boundaries between physical entities and their Avatars in cyberspace.

1.4 Web3.0 and its effects

Contemporary cyberspace users constitute nearly 60% of the world’s population (over 4.3 billion people). The majority of Internet users (over 3.4 billion) are active users of social media, and most of them (over 3.2 billion) use mobile devices as the interface. Web platforms have become the norm, and thus the Internet has reached the Web3.0 level of “culture”, where many users are almost “permanently connected”. Being online has become a key need, in particular for the so-called digital natives, i.e. a generation of people who do not know the world without the Internet. However, the need to be online is also becoming more widespread among older generations that are trying to meet the expectations of the surrounding world, i.e. digital immigrants.

Social media, where – as already mentioned – “people meet”, constitute a fundamental element of the information society of modern man. For many users, social media are the only source of information about the surrounding world. The role of social media is currently hard to overstate as regards their influence on public opinion, the perception of reality and the views of individuals, social groups or even whole societies [2][6]. The problem is now subject to public debate, which comes somewhat late and is held mostly by, and from the perspective of, the aforementioned digital immigrants, using only qualitative studies.

The users of the Web3.0 “culture” generate large amounts of data and huge traffic on the Internet. Controlling such information noise, as well as finding the “source of truth” and the “source of falsehood”, becomes a real challenge. Nonetheless, social media do have an impact on how the majority of society obtains information, consumes it and shares it. It turns out that this process is very fast and in many cases blind, even mindless, which makes it practically impossible to verify the information and its sources. Social media have transformed online communication into an interactive dialog whose participants feel the need to express themselves and articulate “their” opinions and beliefs, seeking approval or even acclaim. With the above in mind, it is difficult to have a merit-based discourse. Communication in social media is mainly based on emotions, which are stimulated by natural instincts, such as:

- confirmation bias – preferred contents confirm present opinions and beliefs;

- similarity attraction effect – establishing contacts with persons having similar opinions and beliefs.

Therefore, social media are full of so-called social bubbles, i.e. communities with usually antagonistic opinions that tend to minimize mutual interactions and actual communication with one another. What is more, the Web3.0 “culture”, using intelligent web platforms, creates for its users so-called filter bubbles, which intensify the aforesaid formation of social bubbles. Seemingly unbiased and censorship-free interactive conversations in social media unexpectedly lead to unusual social polarization. In conclusion, the Web3.0 “culture” seems to be fertile ground for all types of manipulation of information and of its users. What drives manipulation are the so-called information disorders disseminated in social media.
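The way in which a preference for similar opinions can split a population into separate bubbles may be illustrated with a small simulation. The sketch below uses a bounded-confidence opinion model of the Deffuant type, which is a stand-in chosen here for illustration and is not a model proposed in this paper. All names and parameter values (`threshold`, `mu`, `gap`) are assumptions: two randomly chosen agents move their opinions closer only if they already differ by less than `threshold`, which loosely plays the role of confirmation bias and the similarity attraction effect.

```python
import random


def simulate_bounded_confidence(n_agents=200, threshold=0.2, mu=0.5,
                                steps=50_000, seed=0):
    """Deffuant-style bounded-confidence model (illustrative assumption).

    Opinions are numbers in [0, 1]. At each step two random agents meet;
    they compromise only if their opinions differ by less than `threshold`.
    """
    rng = random.Random(seed)
    opinions = [rng.random() for _ in range(n_agents)]
    for _ in range(steps):
        i, j = rng.sample(range(n_agents), 2)
        diff = opinions[j] - opinions[i]
        if abs(diff) < threshold:
            # Both agents move towards each other by a fraction mu of the gap.
            opinions[i] += mu * diff
            opinions[j] -= mu * diff
    return opinions


def count_clusters(opinions, gap=0.05):
    """Count opinion groups separated by more than `gap` (hypothetical helper)."""
    ordered = sorted(opinions)
    clusters = 1
    for left, right in zip(ordered, ordered[1:]):
        if right - left > gap:
            clusters += 1
    return clusters


if __name__ == "__main__":
    for threshold in (0.5, 0.2, 0.1):
        final = simulate_bounded_confidence(threshold=threshold)
        print(threshold, count_clusters(final))
```

In this toy setting a smaller `threshold`, i.e. less willingness to engage with distant opinions, tends to leave more isolated opinion groups. A recommendation mechanism that shows users only nearby opinions would act like a further reduction of `threshold`, which is one simple reading of how filter bubbles can reinforce social bubbles.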

2 Disinformation

2.1 Static approach

Currently, many research centers as well as public and private institutions are involved in the process of classification of information disorders. According to one of the general taxonomies, the information disorders may be classified in the following manner [23]:

- false, misleading information (mis-information);

- information causing damage/pain (mal-information);

- intentionally misleading information that may cause damage/pain (dis-information).

The subject of further interest is disinformation, which refers to information that may have the characteristics of both mis-information and mal-information or of just one of them. In qualitative terms, disinformation is defined as information that may create an image of reality not necessarily in accordance with the facts. Depending on the intent to deceive [22], the types of disinformation may be as follows: satire/parody, false connection, misleading content, false context, imposter content, manipulated content and fully fabricated content. In quantitative terms, disinformation may be defined as a predefined amount of information aimed at creating an image of reality on a specific subject, including a complete lack of information, information noise, or a flood of information causing information overload in recipients.

In light of the above, information disorders may be classified in different ways and take different forms, e.g. text, graphics, audio recordings and videos. In common usage, such information disorders are called fakes, or fake news if they refer to current affairs. Fakes often appear to be true news; only after they have been analyzed and their source verified is it possible to state that they are information disorders. Deepfakes, in turn, have recently emerged as an absolute novelty and a huge risk. While fakes are created entirely by people, of course using certain tools such as text or graphics editors, deepfakes are generated automatically, using advanced algorithms, in particular machine learning algorithms and, more precisely, deep neural networks, image recognition and speech synthesis. Tools that automate the detection of deepfakes are also being designed.

Unfortunately, as already mentioned, the Web3.0 “culture” truly “discourages” users from critical thinking. Therefore, a systemic approach to eliminating fakes is essential, which is reflected, among other things, in the emergence of people or whole institutions responsible for checking facts (fact checkers) at different levels.

2.2 Dynamic approach

Disinformation is also defined as the process of manipulation, intentional misleading and casting doubt on the facts, using different types of information disorders. Disinformation is therefore an offensive information operation, i.e. one that interferes with the process of achieving situation awareness, aimed at creating a specific image of reality in the recipient and hence making the recipient take (or not take) a decision in accordance with the assumptions of the center planning the information operation. Disinformation constitutes, in its essence, interference in the decision-making process of the recipient (object or group of objects), also by hiding certain events or information about such events, or by generating information noise to confuse the recipient. The disinformation process is therefore based not only on false information but on the whole range of the previously defined information disorders. At this point, it should be mentioned that the disinformation process to a large extent resembles negotiations between parties, marketing campaigns, including advertising, and many other human activities. In this sense, the disinformation process occurs wherever people have incomplete or uncertain knowledge about the subject under consideration, including architectural design and engineering optimization, along with problem formulation, the construction of computational methods and software development.

Advanced information operations are based on prior reconnaissance of the recipient (object or group of objects) to whom they are addressed. As a result of this reconnaissance, profiles are created, which are de facto parametrized models of the recipient’s subjectivity, on the basis of which the recipient shapes their image of reality. The construction of the subjectivity models is a complex process, which may consist in “profiling through active communication”. On the basis of the profiles, the attacker shapes the information environment of the attacked object to create an image of reality (in some way imposed by the attacker) in line with the goals of the attacker. The attacker thereby gains an informational advantage, which makes it possible to influence the decision-making processes of the attacked object. It should also be mentioned that content is customized according to the same pattern in the Web3.0 “culture”, where intelligent web platforms profile their users. In this approach, the essence of the disinformation process is clearly visible: it is not only the dissemination of false information.

3 Modeling of information operations

3.1 Theory of reflexive control

One of the most advanced mathematical models of information operations is the theory of reflexive control, developed in the Soviet Union by Vladimir Lefebvre [8], [9], [10], [11] in the 1960s. The Russian concept of reflexive control is similar to the American concept of perception management, however, with a significantly more developed formal apparatus and probably more advanced tools from the fields of, among other things, psychology and social psychology. The starting point for the reflexive control process is to build a specific model of the object to be controlled. The object is usually a person, i.e. an object that thinks (is reflexive) and hence creates subjective images (models) describing the world, including its beliefs and desires.

The foundations of the construction of such a specific model are connected with the concept of reflexion, understood as the ability to adopt the perspective of an observer of one’s own beliefs and desires. Reflexion defined in this way is reflexion of the first kind, or self-reflexion. The core of reflexion is its recursive character. The concept of reflexion was generalized by Lefebvre and, in the context of reflexive control, it is understood as the ability to adopt the perspective of an observer of one’s own and another reflexive object’s beliefs and desires. Reflexion defined in this way is reflexion of the second kind, which leads to the concept of the hierarchy of realities.

When building the model of the object subject to reflexive control, it is of key importance to include the aforesaid concept of reflexion of the modeled object. Object X that wants to control object Y constructs a model of the world image built by object Y at a specific level of the hierarchy of realities. On the basis of such a model, object X prepares information disorders dedicated to object Y, aimed at encouraging object Y to make the decision expected by object X, in such a manner that object Y is convinced that it makes this decision independently and that the decision is the best possible one for object Y. The essence of reflexive control is the change of approach from attempting to predict the opponent’s decision-making processes to influencing them by means of information disorders [5], [18].

If the process of reflexive control is executed secretly and object X has properly “read” the subjective world image of object Y, then each portion of information disorders (marked as id in Fig. 2) sent from object X to object Y constitutes additional information for object X about object Y. It is also interesting that object Y may reverse the process: it may abandon the role of the controlled object and assume the role of the object controlling object X, while still confirming object X in its belief that it has full control of the reflexive control process.
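The core step of reflexive control described above, in which object X uses its model of object Y to select the information that leads Y to the decision X wants, can be sketched in a few lines. This is a deliberately minimal illustration under stated assumptions and not Lefebvre’s formal apparatus: Y’s world image is reduced to a single subjective probability `belief_threat`, Y is assumed to maximize expected payoff, and each candidate message is assumed to shift that belief by a known amount. The class `ModelOfY`, the functions and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ModelOfY:
    """X's model of Y's world image (illustrative assumption).

    belief_threat: Y's subjective probability that a threat is present.
    payoffs: action -> (payoff if threat, payoff if no threat), as Y sees them.
    """
    belief_threat: float
    payoffs: dict


def best_action(model):
    """The decision Y is expected to make 'independently' under its beliefs."""
    def expected(action):
        if_threat, if_safe = model.payoffs[action]
        return model.belief_threat * if_threat + (1 - model.belief_threat) * if_safe
    return max(model.payoffs, key=expected)


def choose_message(model, messages, desired_action):
    """Pick the least intrusive message that makes Y choose desired_action.

    messages: name -> assumed shift of Y's belief_threat caused by the message.
    Returns None if no candidate message is predicted to work.
    """
    for name, shift in sorted(messages.items(), key=lambda kv: abs(kv[1])):
        new_belief = min(1.0, max(0.0, model.belief_threat + shift))
        if best_action(ModelOfY(new_belief, model.payoffs)) == desired_action:
            return name
    return None


# Hypothetical scenario: Y currently prefers to advance, X wants Y to withdraw.
Y_AS_SEEN_BY_X = ModelOfY(
    belief_threat=0.2,
    payoffs={"advance": (-10, 5), "withdraw": (0, 0)},
)
CANDIDATE_MESSAGES = {"silence": 0.0, "rumour": 0.1, "staged exercise": 0.3}

if __name__ == "__main__":
    print(best_action(Y_AS_SEEN_BY_X))
    print(choose_message(Y_AS_SEEN_BY_X, CANDIDATE_MESSAGES, "withdraw"))
```

Note that Y still picks the action that is best under its own beliefs; X only changes the beliefs. The sketch works only as well as X’s model of Y, which is why the accuracy of that model is so important in the description above.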

3.2 Theory of disinformation

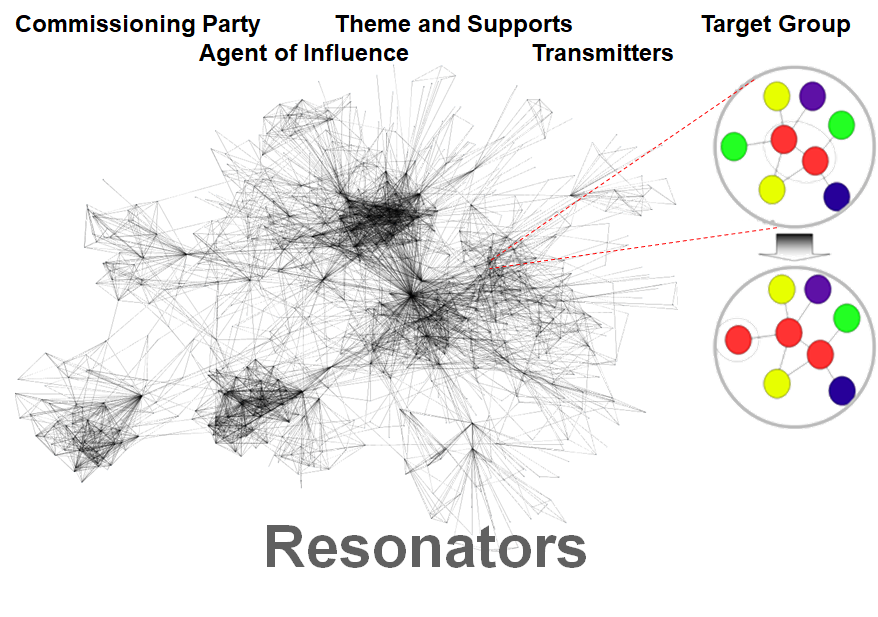

When modeling information operations carried out in social media, the distinction between the controlling object and the controlled object is not sufficient. In such a case, it is helpful to use the theory of disinformation developed by Vladimir Volkoff [20], according to which different actors play certain roles in the disinformation process:

- Commissioning Party (Client) – a person or a group that derives benefits from the disinformation process;

- Agent of Influence – an entity executing the commissioned disinformation process;

- Theme (Leitmotif) – the main idée fixe being a specific narrative for the purpose of the disinformation process;

- Supports (Props) – events (true or false) that are “fuel” for the disinformation process;

- Transmitters (Carriers) – objects (including media) connected with the Commissioning Party or the Agent of Influence and intentionally propagating information disorders;

- Resonators – objects (including media) not connected with the Commissioning Party or the Agent of Influence and unintentionally propagating information disorders;

- Target Group (Intended Audience) – a person or a group being the target of disinformation process.

In the case of social media, many objects playing different roles participate in the disinformation process. What is also important is that in this information environment it is very easy to reach people who propagate disinformation as Resonators. It is equally easy to obtain Transmitters in the form of social bots and whole social botnets, which may be constructed relatively easily, with little technical knowledge, or bought as a service. Due to the Web3.0 “culture”, Resonators are widely available and have an actual impact on the disinformation process; often, because of their number, they may completely take over the role of Transmitters.

The main issue in case of the modeling of information operations in social media is to understand the dissemination (diffusion) process of the phenomena in this environment [4], [5], including assessment of the role and significance of particular “nodes” in the network and forecast of diffusion dynamics and reach of the information being a product of the information operation. It should be also mentioned that there are fundamental differences at the model level and technical level of the disinformation process when the object of the information attack is a single object or a group of objects.
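To make the notions of node significance and diffusion reach more concrete, the following sketch simulates the independent cascade model, one of the standard diffusion models in the network literature, on a toy graph. It is offered as an assumption-laden illustration rather than as the specific model used in [4], [5]. The graph `STAR`, the activation probability `p` and the function names are hypothetical; seeds can be read as Transmitters and the remaining nodes as potential Resonators.

```python
import random
from collections import deque


def independent_cascade(graph, seeds, p, rng):
    """One run of the independent cascade model.

    graph: dict mapping node -> list of neighbours.
    Every newly activated node gets a single chance to activate each of its
    still inactive neighbours, succeeding with probability p.
    Returns the set of nodes reached by the information.
    """
    active = set(seeds)
    frontier = deque(seeds)
    while frontier:
        node = frontier.popleft()
        for neighbour in graph.get(node, ()):
            if neighbour not in active and rng.random() < p:
                active.add(neighbour)
                frontier.append(neighbour)
    return active


def expected_reach(graph, seeds, p, runs=1000, seed=0):
    """Monte Carlo estimate of the average number of nodes reached."""
    rng = random.Random(seed)
    total = sum(len(independent_cascade(graph, seeds, p, rng)) for _ in range(runs))
    return total / runs


def degree_centrality(graph):
    """Share of the other nodes that each node is directly connected to."""
    n = len(graph)
    return {node: len(neighbours) / (n - 1) for node, neighbours in graph.items()}


# Toy undirected network: one well-connected account and five followers.
STAR = {
    "hub": ["a", "b", "c", "d", "e"],
    "a": ["hub"], "b": ["hub"], "c": ["hub"], "d": ["hub"], "e": ["hub"],
}

if __name__ == "__main__":
    print(degree_centrality(STAR))
    print(expected_reach(STAR, ["hub"], p=0.3))
    print(expected_reach(STAR, ["a"], p=0.3))
```

Comparing the estimated reach for different seed nodes gives a simple, simulation-based measure of how much a particular node matters for the spread, which can be set against structural measures such as degree centrality.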

4 Summary

Reality at the information level is more and more difficult to verify and easier to manipulate because of cyberspace. Due to wide access to the Internet, social media have started to play a more significant role in shaping public opinion on basically every subject and to broadly influence how individuals, social groups and whole societies perceive the world. The awareness of risks at the information level is still limited.

The theory of reflexive control facilitates the analysis of the decision-making process with respect to both one’s own and the opponent’s decisions, given the possibility of manipulating situation awareness. The theory enjoys quite a rich history and a strong position among national security analysts both in Russia and in the United States. Many Western analysts see it as the Russian alternative to game theory [21]. At this point, it should be noted that hypergame theory [1], [7], [13], [19], also referred to as metagames or higher-order games, which first appeared in the 1970s, may be perceived as the Western response to the theory of reflexive control. Nowadays, the theory of reflexive control is being developed, to a certain degree independently, by several research groups that propose different formal apparatuses to describe and analyze reflexive control [12], [14], [15], [16], [17]. One of the branches may be the aforementioned hypergame theory. At the same time, information operations are also modeled using traditional game theory [3].

References

[1] Bennett P., Toward a Theory of Hypergames, Omega 5, (1977) 749–751.

[2] Bond R., Fariss Ch., Jones J., Kramer A., Marlow C., Settle J., Fowler J., A 61-Million-Person Experiment in Social Influence and Political Mobilization, Nature 489, (2012) 295–298.

[3] Jormakka J., Mölsä J., Modelling Information Warfare as a Game, Journal of Information Warfare, Vol. 4, Issue 2, (2005) 12–25.

[4] Kasprzyk R., Diffusion in Networks, Journal of Telecommunications and Information Technology, No 2, (2012) 99–106.

[5] Kasprzyk R., The Essence of Reflexive Control and Diffusion of Information in the Context of Information Environment Security, Advances in Intelligent Systems and Computing, Springer, Vol. 835, (2019) 720–728.

[6] Kramer A., Guillory J., Hancock J., Experimental evidence of massive-scale emotional contagion through social networks, PNAS Proceedings of the National Academy of Sciences of the United States of America, 111(24), (2014) 8788–8790.

[7] Kovach N.S., Gibson A.S., Lamont G.B., Hypergame Theory: A Model for Conflict, Misperception, and Deception, Game Theory, Vol. 2015, Article ID 570639 (2015).

[8] Lefebvre V., Basic ideas of reflexive games logic, Problemy Issledovania Sistem i Structur, Moscow, AN USSR Press, (1965).

[9] Lefebvre V., Baranov P., Lepsky V., Internal Currency in Reflexive Games, Izvestia, AN USSR, Tekhnicheskaya Kibernetika, No. 4, (1969).

[10] Lefebvre V., Algebra of Conscience, second enlarged edition, Kluwer, Holland, (2001).

[11] Lefebvre V., Lectures on reflexive Game Theory, Leaf & Oaks Publisher, Los Angeles, USA, (2010).

[12] Novikov D., Chkartishvili A., Refleksivnye igry (Reflexive games), SINTEG, Moscow, (2003).

[13] Mateski M., Mazzuchi T., Sarkani S., The Hypergame Perception Model: A Diagrammatic Approach to Modeling Perception Misperception and Deception, Military Operations Research, Vol. 15, No 2, (2010) 21–37.

[14] Schreider Yu., Continuously-valued logics Lefm as languages of reflexion, Nauchno-tekhnicheskaya Informatsia, No 1–2, (1999).

[15] Taran T., Model of Reflexive Behavior in Conflict Situation, Journal of Computer and Systems Sciences International, No 1, (1998).

[16] Taran T., Many-valued Boolean Model of Reflexive Agent, Multi-Valued Logic, No 7 (2001).

[17] Taran T., Boolean models of reflexive control and their application for describing information warfare in social-economical systems, Avtomatika i Telemekhanika, No 11, (2004).

[18] Thomas T., Russia’s Reflexive Control Theory and the Military, Journal of Slavic Military Studies 17, (2004) 237–256.

[19] Trudolubov A., Decisions on dependency nets and reflexive polynomials, VI Symposium po Kibernetike, Part III, Tbilisi, (1972).

[20] Volkoff V., Petite histoire de la désinformation, Les Editions du Rocher, (1999).

[21] Von Neumann J., Morgenstern O., Theory of Games and Economic Behavior, John Wiley and Sons, (1944).

[22] Wardle C., Fake news. It’s complicated, https://firstdraftnews.com:443/fake-news-complicated/, (2017).

[23] Wardle C., Derakhshan H., Information Disorder: Toward an interdisciplinary framework for research and policymaking, Council of Europe, Vol. 9 (2017).

[24] JP 3-12, Cyberspace Operations, U.S. Joint Chiefs of Staff, 8 June 2018.

[25] JP 3-13, Information Operations, U.S. Joint Chiefs of Staff, 20 November 2014.

[26] https://www.definitions.net/definition/situation+awareness

Author:

Lt. Col. Rafał Kasprzyk, PhD, Eng., Faculty of Cybernetics, Military University of Technology (Wojskowa Akademia Techniczna, WAT)

A graduate of the Faculty of Cybernetics at WAT (top graduate of his commissioning class in 2004, top graduate of the Academy in 2005). In 2012 he defended his doctoral dissertation with distinction and received the degree of Doctor of Technical Sciences in the discipline of computer science. In 2015 he was awarded a three-year scholarship of the Ministry of Science and Higher Education (MNiSW) for Outstanding Young Scientists. Since 2018 he has headed the Cyberspace Modeling and Analysis Laboratory at the Faculty of Cybernetics, WAT. His main research interests concern the modeling, simulation and analysis of network systems using the models and methods of operations research, in particular graph and network theory, Big Data processing technologies and selected elements of artificial intelligence. He has led many projects (including CARE, COPE, CART, SAVE, THEIA and Manify) that have received multiple awards at international invention fairs (including Brussels, Paris, Seoul and Warsaw). He supervises numerous student teams that have taken top places in national and international computing competitions.